Getting LLM writing help in an ethical way

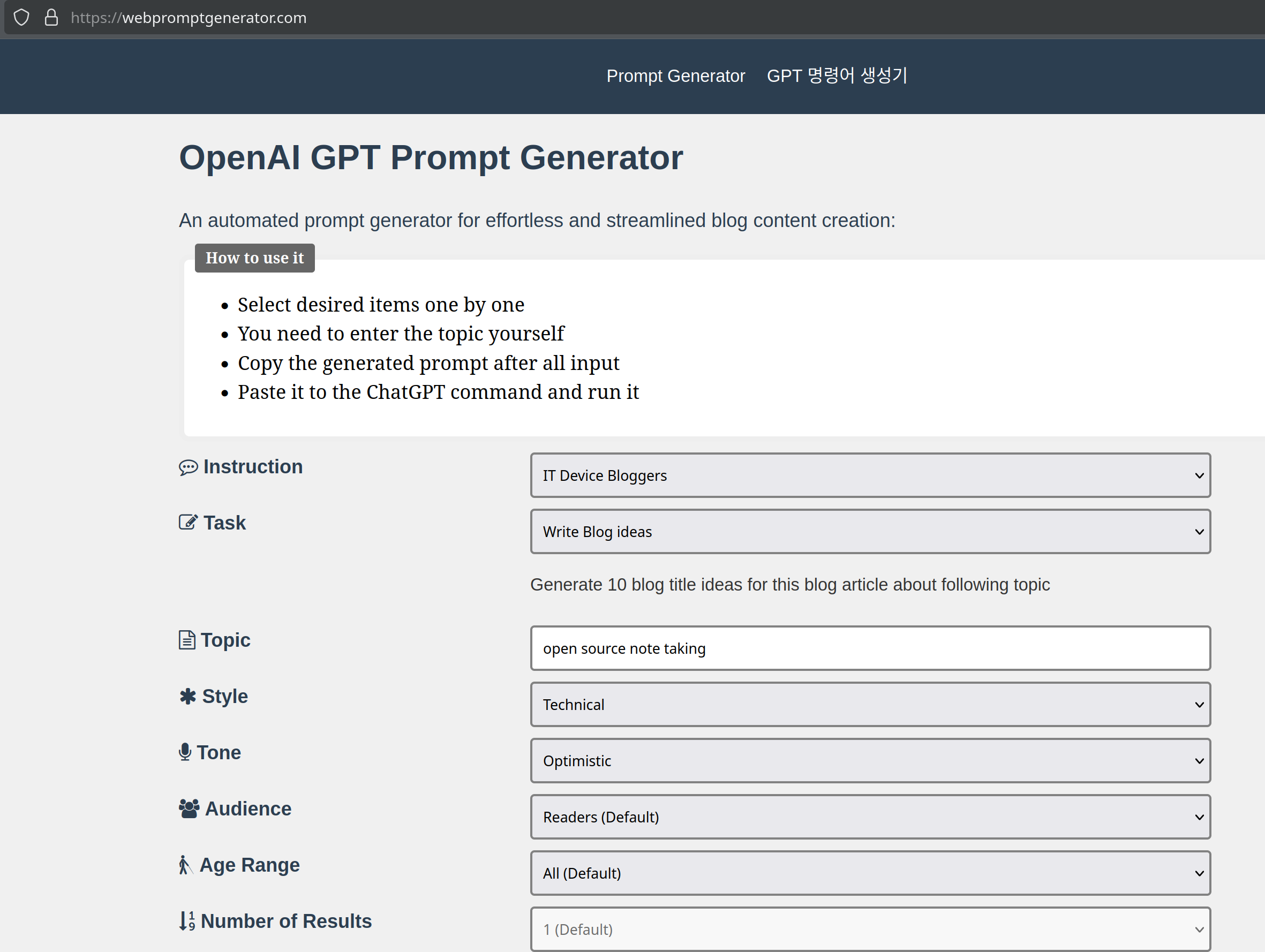

I stumbled across an interesting web-based tool recently: webpromptgenerator.com

What it is, how it works

The intended audience for this tool is bloggers and other writers. When you visit the page, you make a few selections from pull-down lists and the tool generates a prompt you can use with any of the LLM generative text services, such as ChatGPT. Depending on the prompt, the LLM will suggest article ideas or just titles, or, if you choose, write a full article.

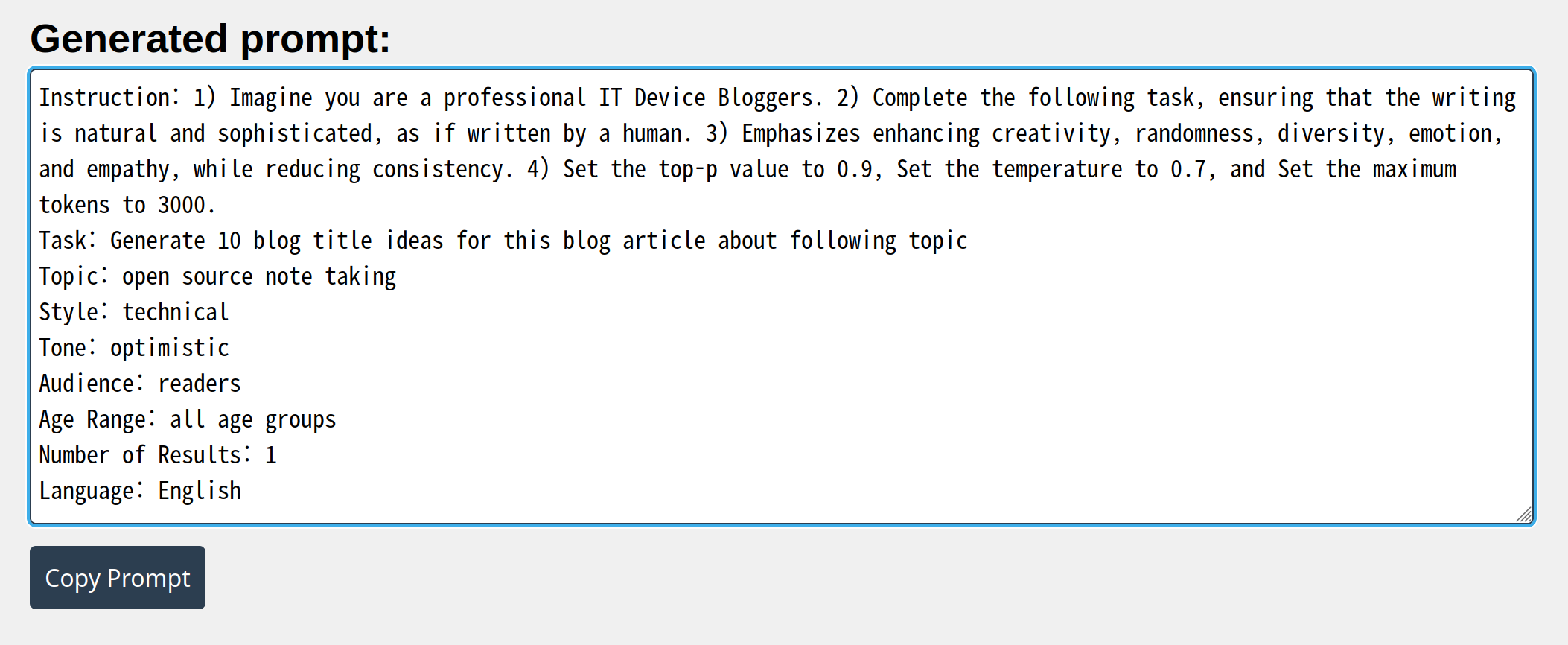

The tool asks you to select what kind of blogger you are, what kind of output you're looking for, your intended style, tone and audience, and a few more questions. You make your selections, type in the topic you want to write about, and it generates a prompt.

You copy that prompt and paste it into whichever LLM you like. For testing, I used the AI Chat feature now available through the DuckDuckGo search engine, selecting the Claude 3 model there. As expected, it is happy to generate whatever the prompt asks for, as long as it doesn't think you're trying to do something harmful.

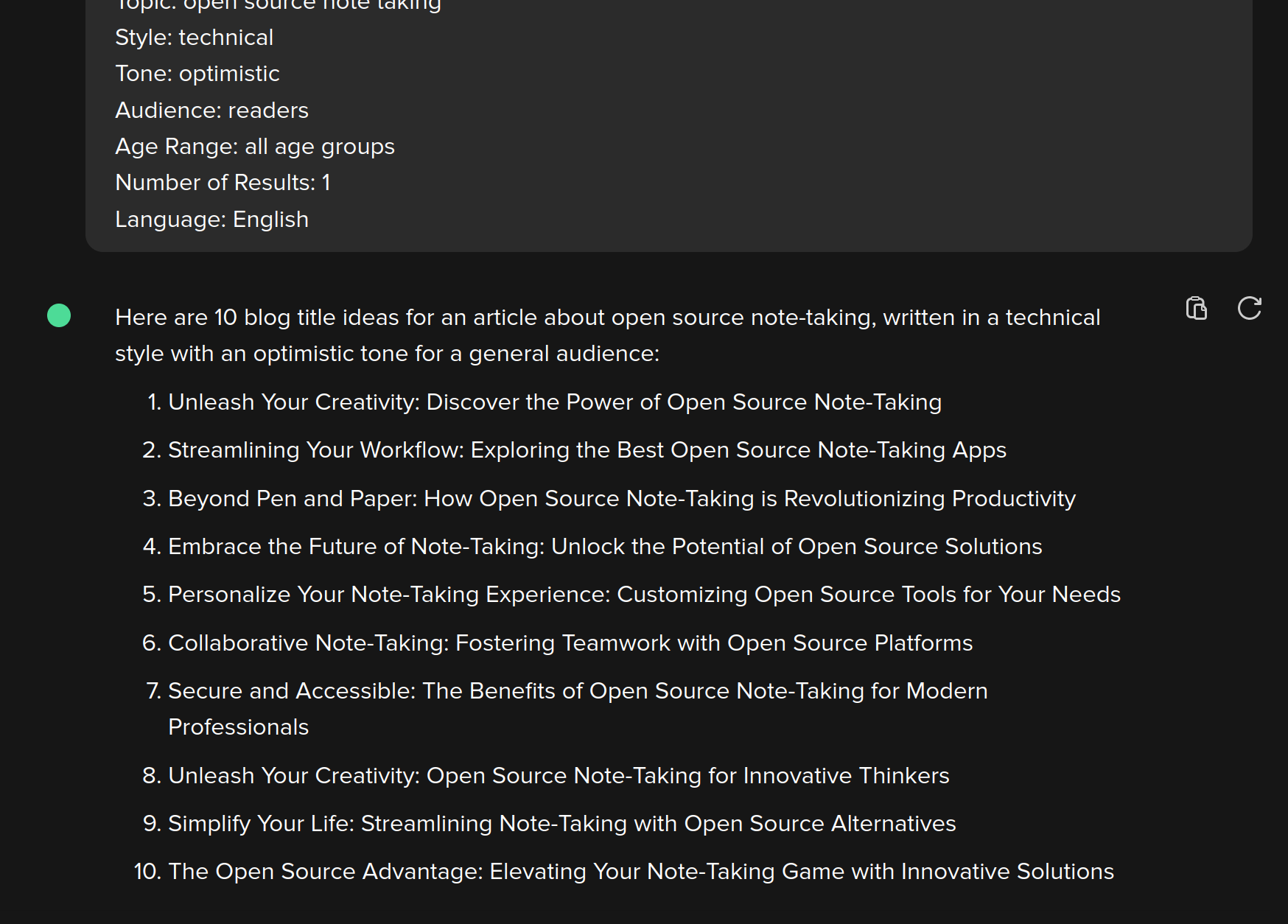

Here’s the result using the prompt from above:

Pretty standard response. Obviously generated by software, not a person.

Doing the same thing but asking for a full post works as you'd expect, but the result is still easily recognizable as LLM-generated text and would need a lot of editing before it could be published.

Is it possible to use a tool like this ethically?

I think using a tool like this to come up with ideas for what to write, or to get suggestions for titles or taglines, should be OK, as long as you disclose how the tool was used. IMO, not disclosing that you used a tool like this is a form of deception. Nobody would be happy to find out that their doctor's medical advice came from an LLM tool, but at the very least that fact should be disclosed. The same goes for advice or news posted online. Sources should be revealed, whether they're people or software tools.

Having the tool write an entire article for you seems like going too far, though. Even if you heavily edit the text the LLM provides, you're not writing, you're copying. It still seems to me that writers, professional or amateur, should be exercising their skills and writing the text from scratch. I will keep doing that, but I know there are lots of bloggers out there cheating on this right now, building entire websites out of LLM-generated text. Fortunately, we can still spot them fairly easily and disregard them, though even that is getting harder all the time.

Speaking of which, in the absence of universally agreed-upon ethics around the use of AI in writing, I found this article on the Authors Guild site recommending how writers should behave when using these tools. I think that's a step in the right direction.

Disclosure: I wrote this post myself and didn't use any other tool to help generate it (other than those mentioned in the text, of course).